1. Introduction

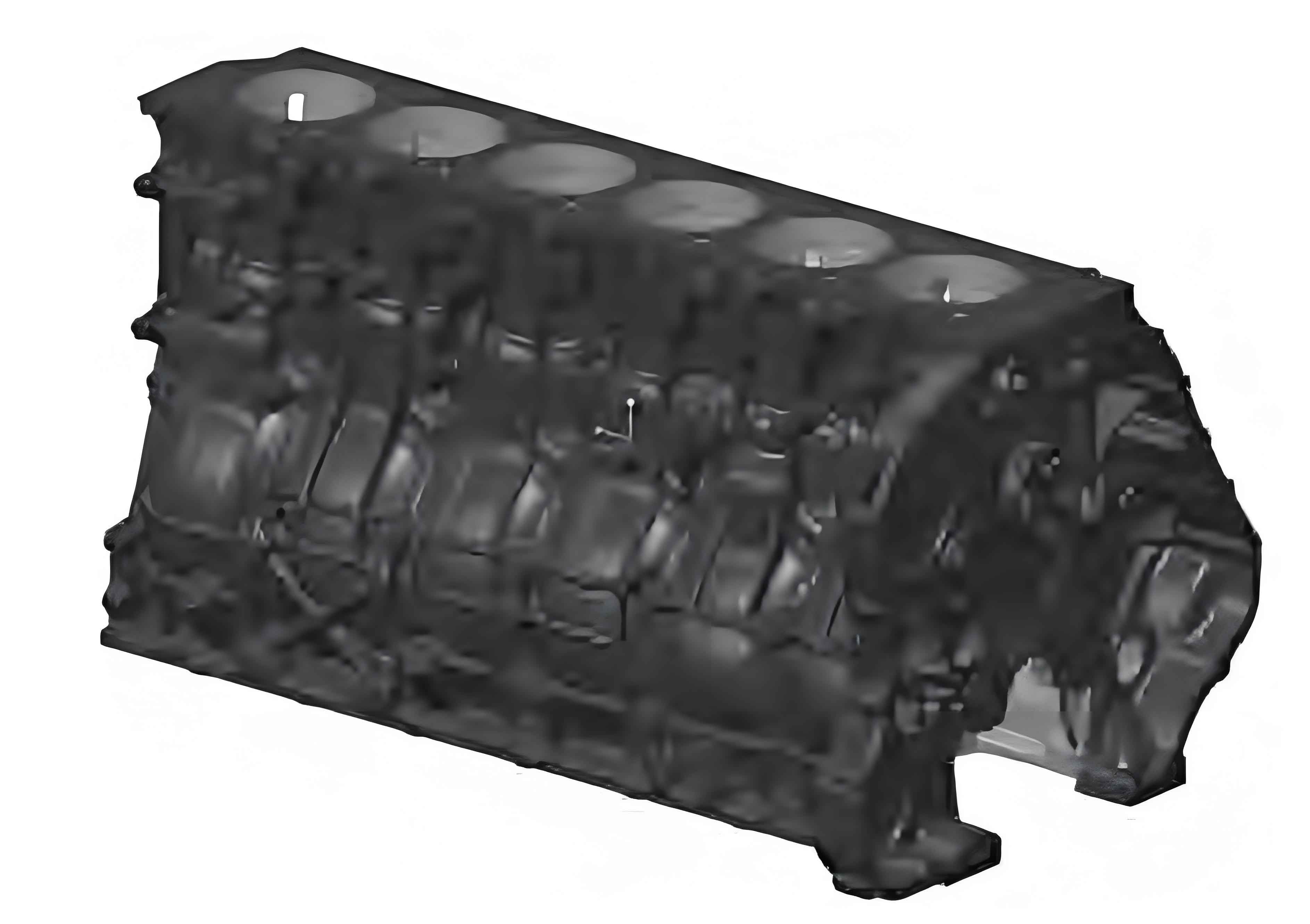

The engine cylinder block, as the core component of automotive powertrains, demands exceptionally high manufacturing precision. Surface defects such as shrinkage pores, cracks, sand holes, and contaminants inevitably occur during casting, machining, or transportation. Traditional manual inspection is labor-intensive, inefficient, and prone to human error. Automated defect detection systems based on machine vision and deep learning have emerged as promising alternatives. However, a critical challenge persists: the small size of available defect datasets hinders the training of robust deep learning models.

In this study, we propose a hybrid framework combining YOLOv5 for defect detection and pix2pix (a conditional generative adversarial network, or cGAN) for dataset augmentation. Our goal is to address the scarcity of labeled defect samples while achieving high accuracy and real-time performance for engine cylinder block surface inspection.

2. Related Work

Existing approaches to engine cylinder block defect detection rely heavily on traditional machine vision techniques, such as template matching and texture analysis. While these methods are computationally lightweight, they struggle with complex defect patterns and with variations in lighting or surface conditions. Recent advances in convolutional neural networks (CNNs) have improved detection accuracy, but these models require large-scale training datasets, which are often unavailable in industrial settings.

GAN-based data augmentation has shown potential for synthesizing realistic defect images. For instance:

- DCGAN generates images from random noise, and CycleGAN learns image-to-image translation from unpaired data; both tend to produce low-resolution outputs.

- pix2pix performs image-to-image translation on paired data, which makes it well suited to expanding engine cylinder block defect datasets by converting defect-free images into defective ones.

3. Methodology

Our framework integrates data augmentation, image preprocessing, and deep learning-based detection. The workflow is summarized below:

3.1 System Architecture

The hardware-software system comprises:

- Hardware: Industrial CMOS camera (Hikvision MV-CS200-10GM), ring LED lighting (MV-LRDS-170-20-W), and a computing platform.

- Software:

  - Data Augmentation Module: Uses pix2pix to synthesize defect images.

  - Detection Module: Employs YOLOv5 for defect localization and classification.

3.2 Dataset Augmentation with pix2pix

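The pix2pix model translates defect-free engine cylinder block images into defective ones using paired training data. Its conditional adversarial loss is defined as:

$$\mathcal{L}_{\text{cGAN}}(G, D) = \mathbb{E}_{x,y}\left[\log D(x, y)\right] + \mathbb{E}_{x,z}\left[\log\left(1 - D(x, G(x, z))\right)\right]$$

where $x$ is a defect-free input image, $y$ is the corresponding real defective image, $z$ is random noise, $G$ is the generator, and $D$ is the discriminator. $D$ is trained to maximize this loss and $G$ to minimize it. In the original pix2pix formulation, the adversarial term is combined with an L1 reconstruction term that keeps the generated image close to the target:

$$G^{*} = \arg\min_{G}\max_{D}\; \mathcal{L}_{\text{cGAN}}(G, D) + \lambda\, \mathbb{E}_{x,y,z}\left[\lVert y - G(x, z)\rVert_{1}\right]$$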
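To make the two expectations in the adversarial loss concrete, they can be estimated from a batch of discriminator outputs by replacing each expectation with a sample mean. The sketch below assumes NumPy arrays of probabilities already produced by some discriminator; the function name `cgan_objective` is hypothetical, and no generator or discriminator network is included.

```python
import numpy as np


def cgan_objective(d_real: np.ndarray, d_fake: np.ndarray, eps: float = 1e-12) -> float:
    """Sample-mean estimate of E[log D(x, y)] + E[log(1 - D(x, G(x, z)))].

    d_real: discriminator probabilities for real (defect-free, defective) pairs.
    d_fake: discriminator probabilities for (defect-free, generated) pairs.
    Arrays may have any shape, e.g. a grid of patch scores per image.
    """
    # Clip into the open interval (0, 1) so that log() never receives 0.
    d_real = np.clip(d_real, eps, 1.0 - eps)
    d_fake = np.clip(d_fake, eps, 1.0 - eps)
    return float(np.mean(np.log(d_real)) + np.mean(np.log(1.0 - d_fake)))


# A maximally uncertain discriminator (0.5 everywhere) yields 2 * log(0.5).
print(cgan_objective(np.full((2, 4), 0.5), np.full((2, 4), 0.5)))
```

The discriminator is updated to increase this value and the generator to decrease it; a confident, correct discriminator pushes the value toward 0.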

Key steps:

- Input: Defect-free images and their defective counterparts.

- Training: The generator $G$ learns to add realistic defects, while the discriminator $D$ distinguishes real from synthetic images.

- Output: High-resolution synthetic defect images (2,580 × 1,944 pixels).
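Paired training data is often stored as a single image with the input on the left and the target on the right (the "aligned" A|B layout used by the public pix2pix reference implementation), with 8-bit pixels rescaled to [-1, 1] to match a tanh generator output. The helper names below are hypothetical, and the sketch covers only array handling, not file I/O or the network.

```python
import numpy as np


def make_aligned_pair(defect_free: np.ndarray, defective: np.ndarray) -> np.ndarray:
    """Concatenate a defect-free image (A) and its defective counterpart (B) side by side.

    Axis 1 is the width axis for both (H, W) and (H, W, C) arrays.
    """
    if defect_free.shape != defective.shape:
        raise ValueError("paired images must have identical shapes")
    return np.concatenate([defect_free, defective], axis=1)


def to_tanh_range(img: np.ndarray) -> np.ndarray:
    """Map 8-bit pixel values [0, 255] to [-1, 1]."""
    return img.astype(np.float32) / 127.5 - 1.0


def split_pair(pair: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Split an A|B image back into normalized (input, target) arrays."""
    half = pair.shape[1] // 2
    return to_tanh_range(pair[:, :half]), to_tanh_range(pair[:, half:])


a = np.zeros((4, 3), dtype=np.uint8)        # stand-in defect-free patch
b = np.full((4, 3), 255, dtype=np.uint8)    # stand-in defective patch
x, y = split_pair(make_aligned_pair(a, b))
```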

3.3 Image Preprocessing

To enhance defect features, we apply:

- Grayscale Enhancement: Increases contrast between defects and background.

- Band-Pass Filtering: Isolates defects by suppressing high-frequency noise and low-frequency texture variations.
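Both preprocessing steps can be sketched with NumPy alone. The method does not specify the enhancement or filter design, so this sketch assumes a percentile-based linear contrast stretch for grayscale enhancement and an ideal annular mask in the 2-D Fourier domain for band-pass filtering. The cutoff radii `r_low` and `r_high` (in cycles per image) are hypothetical parameters that would need tuning to the defect sizes of interest.

```python
import numpy as np


def contrast_stretch(gray: np.ndarray, low_pct: float = 1.0, high_pct: float = 99.0) -> np.ndarray:
    """Linearly map the [low_pct, high_pct] percentile range of intensities to [0, 1]."""
    lo, hi = np.percentile(gray, [low_pct, high_pct])
    if hi <= lo:  # flat image: nothing to stretch
        return np.zeros(gray.shape, dtype=np.float64)
    return np.clip((gray.astype(np.float64) - lo) / (hi - lo), 0.0, 1.0)


def band_pass(gray: np.ndarray, r_low: float, r_high: float) -> np.ndarray:
    """Keep spatial frequencies whose radius lies in [r_low, r_high] cycles per image.

    Radii below r_low (slow illumination and texture variation) and above r_high
    (fine-grained noise) are zeroed with an ideal, hard-edged mask.
    """
    h, w = gray.shape
    spectrum = np.fft.fftshift(np.fft.fft2(gray))
    fy = np.fft.fftshift(np.fft.fftfreq(h)) * h  # vertical frequency, cycles per image
    fx = np.fft.fftshift(np.fft.fftfreq(w)) * w  # horizontal frequency, cycles per image
    radius = np.hypot(fy[:, None], fx[None, :])
    mask = (radius >= r_low) & (radius <= r_high)
    return np.real(np.fft.ifft2(np.fft.ifftshift(spectrum * mask)))


# Example: a 2-cycle stripe pattern survives a [1, 3] band but is removed by [3, 8].
cols = np.arange(16)
stripes = np.tile(np.cos(2 * np.pi * 2 * cols / 16), (16, 1))
kept = band_pass(stripes, 1, 3)
removed = band_pass(stripes, 3, 8)
```

An ideal mask can cause ringing near sharp edges; a smooth band-pass (for example a difference of Gaussians) is a common alternative.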

3.4 Defect Detection with YOLOv5

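YOLOv5 divides each input image into grids and predicts bounding boxes and class probabilities simultaneously. Its loss function combines localization, confidence, and classification errors:

$$
\begin{aligned}
\mathcal{L}_{\text{YOLO}} ={}& \lambda_{\text{coord}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{obj}} \left[(x_i - \hat{x}_i)^2 + (y_i - \hat{y}_i)^2\right] \\
&+ \lambda_{\text{obj}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{obj}} \left(C_i - \hat{C}_i\right)^2
+ \lambda_{\text{noobj}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{noobj}} \left(C_i - \hat{C}_i\right)^2 \\
&+ \sum_{i=0}^{S^2} \mathbb{1}_{i}^{\text{obj}} \sum_{c \in \text{classes}} \left(p_i(c) - \hat{p}_i(c)\right)^2
\end{aligned}
$$

where $S^2$ is the number of grid cells, $B$ is the number of predicted boxes per cell, $\mathbb{1}_{ij}^{\text{obj}}$ indicates that box $j$ in cell $i$ is responsible for an object ($\mathbb{1}_{ij}^{\text{noobj}}$ is its complement), $\mathbb{1}_{i}^{\text{obj}}$ indicates that an object appears in cell $i$, and the hatted and unhatted symbols pair predicted and ground-truth values of the box center $(x, y)$, confidence $C$, and class probability $p(c)$. This sum-squared form follows the original YOLO formulation; the Ultralytics YOLOv5 implementation keeps the same three components but computes the localization term with a CIoU loss and the confidence and classification terms with binary cross-entropy.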
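The sum-squared loss can be evaluated directly with NumPy for a single image, which makes the role of each indicator term explicit. This sketch implements the formula as written (box centers only), not the CIoU/BCE loss used in the Ultralytics YOLOv5 code. The array layout, the function name, and the default weights lambda_coord = 5 and lambda_noobj = 0.5 (the values used in the original YOLO paper) are assumptions, and the cell indicator is derived here as "some box in cell i is responsible for an object".

```python
import numpy as np


def yolo_sse_loss(pred_xy, true_xy, pred_conf, true_conf, obj_mask, pred_cls, true_cls,
                  lambda_coord=5.0, lambda_obj=1.0, lambda_noobj=0.5):
    """Sum-squared YOLO-style loss for one image.

    Shapes (N = S*S grid cells, B boxes per cell, K classes):
      pred_xy, true_xy     (N, B, 2)  box centers
      pred_conf, true_conf (N, B)     confidence scores C
      obj_mask             (N, B)     bool, indicator 1_ij^obj
      pred_cls, true_cls   (N, K)     class probabilities p_i(c)
    """
    obj = obj_mask.astype(np.float64)   # 1_ij^obj
    noobj = 1.0 - obj                   # 1_ij^noobj
    cell_obj = obj.max(axis=1)          # 1_i^obj (assumed: any responsible box in cell i)

    coord = np.sum(obj * np.sum((pred_xy - true_xy) ** 2, axis=-1))
    conf_err = (pred_conf - true_conf) ** 2
    conf_obj = np.sum(obj * conf_err)
    conf_noobj = np.sum(noobj * conf_err)
    cls = np.sum(cell_obj * np.sum((pred_cls - true_cls) ** 2, axis=-1))

    return float(lambda_coord * coord + lambda_obj * conf_obj
                 + lambda_noobj * conf_noobj + cls)


# One grid cell, two boxes; only box 0 is responsible for a class-0 object.
loss = yolo_sse_loss(
    pred_xy=np.array([[[0.5, 0.5], [0.0, 0.0]]]), true_xy=np.array([[[0.4, 0.5], [0.0, 0.0]]]),
    pred_conf=np.array([[0.8, 0.2]]), true_conf=np.array([[1.0, 0.0]]),
    obj_mask=np.array([[True, False]]),
    pred_cls=np.array([[0.9, 0.1]]), true_cls=np.array([[1.0, 0.0]]),
)
```

In the example, the coordinate, object-confidence, no-object-confidence, and class terms contribute 5 x 0.01, 0.04, 0.5 x 0.04, and 0.02 respectively, for a total of 0.13.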

Training Strategy:

- Initial training on the original dataset (185 defective images).

- Iterative retraining on the original dataset augmented with pix2pix-generated synthetic images.

4. Experiments and Results

4.1 Dataset and Evaluation Metrics

- Original Dataset: 87 defect-free and 185 defective engine cylinder block images.

- Defect Types: Shrinkage (204 instances), cracks (41), sand holes (56), and contaminants (38).

- Evaluation Metrics:

  - Precision, recall, and mean average precision (mAP) for YOLOv5.

  - Inception Score (IS) and Fréchet Inception Distance (FID) for pix2pix.
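Precision is TP / (TP + FP), recall is TP / (TP + FN), and mAP is the mean over classes of the average precision (AP), the area under each class's precision-recall curve. The sketch below computes all-point interpolated AP from ranked detections. It assumes each detection has already been matched to ground truth (for example at an IoU threshold of 0.5, which is not stated here), and interpolation schemes vary between toolkits, so numbers from YOLOv5's own validation code may differ slightly. Function names are illustrative.

```python
import numpy as np


def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Precision and recall from true-positive, false-positive, and false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(scores, is_tp, num_gt: int) -> float:
    """All-point interpolated AP for one class.

    scores: confidence of each detection; is_tp: 1 if the detection matched a
    ground-truth box, else 0; num_gt: number of ground-truth boxes of this class.
    """
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    hits = np.asarray(is_tp, dtype=float)[order]
    tp_cum = np.cumsum(hits)
    fp_cum = np.cumsum(1.0 - hits)
    recall = tp_cum / num_gt
    precision = tp_cum / (tp_cum + fp_cum)
    # Add sentinels, then make precision non-increasing in recall (the PR envelope).
    r = np.concatenate([[0.0], recall, [1.0]])
    p = np.concatenate([[1.0], precision, [0.0]])
    p = np.maximum.accumulate(p[::-1])[::-1]
    # Sum envelope precision over each recall increment.
    return float(np.sum((r[1:] - r[:-1]) * p[1:]))


def mean_average_precision(per_class_ap) -> float:
    """mAP: unweighted mean of per-class AP values."""
    return float(np.mean(per_class_ap))


# Three ranked detections, two ground-truth boxes: hit, miss, hit.
ap = average_precision([0.9, 0.8, 0.7], [1, 0, 1], num_gt=2)
```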

4.2 Performance of pix2pix

Table 1 compares synthetic and real defect images using IS and FID:

| Defect Type | IS (Real) | IS (Synthetic) | FID (Real) | FID (Synthetic) |
|---|---|---|---|---|
| Shrinkage | 1.47±0.15 | 1.46±0.16 | 15.55 | 15.79 |
| Crack | 1.04±0.13 | 1.05±0.15 | 15.89 | 16.29 |
| Sand Hole | 1.12±0.26 | 1.11±0.24 | 20.72 | 18.63 |

For all three defect types, the IS and FID values of the synthetic images are close to those of the real images, indicating comparable quality and supporting their use for dataset expansion.
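For reference, the two metrics are defined as $\mathrm{IS} = \exp\left(\mathbb{E}_x\left[\mathrm{KL}\left(p(y \mid x) \,\Vert\, p(y)\right)\right]\right)$, computed from the class probabilities an Inception network assigns to each image, and $\mathrm{FID} = \lVert \mu_1 - \mu_2 \rVert^2 + \mathrm{Tr}\left(\Sigma_1 + \Sigma_2 - 2(\Sigma_1 \Sigma_2)^{1/2}\right)$, computed from Gaussians fitted to Inception features of two image sets. The NumPy sketch below evaluates both formulas from precomputed probabilities and features; it does not include the Inception network. It obtains the square-root trace from the eigenvalues of $\Sigma_1 \Sigma_2$ rather than from a general matrix square root, which is valid because both covariances are positive semi-definite. Function names are illustrative.

```python
import numpy as np


def frechet_distance(mu1, cov1, mu2, cov2) -> float:
    """FID between Gaussians N(mu1, cov1) and N(mu2, cov2).

    Tr((cov1 @ cov2)^(1/2)) equals the sum of square roots of the eigenvalues
    of cov1 @ cov2, which are real and non-negative for PSD covariances.
    """
    mu1, mu2 = np.atleast_1d(mu1), np.atleast_1d(mu2)
    cov1, cov2 = np.atleast_2d(cov1), np.atleast_2d(cov2)
    eig = np.linalg.eigvals(cov1 @ cov2)
    tr_sqrt = np.sum(np.sqrt(np.clip(eig.real, 0.0, None)))  # clip round-off negatives
    diff = mu1 - mu2
    return float(diff @ diff + np.trace(cov1) + np.trace(cov2) - 2.0 * tr_sqrt)


def fid_from_features(feats_a: np.ndarray, feats_b: np.ndarray) -> float:
    """FID from two (num_images, feature_dim) feature matrices."""
    return frechet_distance(feats_a.mean(axis=0), np.cov(feats_a, rowvar=False),
                            feats_b.mean(axis=0), np.cov(feats_b, rowvar=False))


def inception_score(probs: np.ndarray, eps: float = 1e-12) -> float:
    """IS from an (num_images, num_classes) matrix of softmax outputs."""
    probs = np.clip(probs, eps, 1.0)
    marginal = probs.mean(axis=0, keepdims=True)  # p(y)
    kl = np.sum(probs * (np.log(probs) - np.log(marginal)), axis=1)  # KL per image
    return float(np.exp(kl.mean()))
```

Standard FID uses 2048-dimensional Inception features, so with only a few hundred images the covariance estimates are rank-deficient and the score is noticeably biased; values computed on small defect sets are best compared against each other rather than against published benchmarks.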

4.3 YOLOv5 Training Results

After augmenting the dataset, YOLOv5 was trained for 1,000 epochs. Key results:

Table 2: Defect Detection Performance (1,000 Epochs)

| Defect Type | Precision | Recall | mAP |
|---|---|---|---|
| Shrinkage | 92.7% | 95.7% | 98.4% |
| Crack | 97.9% | 98.3% | 96.7% |
| Sand Hole | 92.6% | 98.0% | 99.3% |
| Contaminant | 98.4% | 100% | 99.5% |
| Overall | 95.4% | 98% | 98.4% |
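Assuming the Overall row is the unweighted mean of the per-class values (the aggregation method is not stated), it can be checked directly from Table 2. The per-class precision and recall values average to 95.4% and 98.0%, matching the table, while the per-class mAP values average to 98.475%.

```python
# Per-class (precision, recall, mAP) values from Table 2, in percent.
TABLE_2 = {
    "Shrinkage": (92.7, 95.7, 98.4),
    "Crack": (97.9, 98.3, 96.7),
    "Sand Hole": (92.6, 98.0, 99.3),
    "Contaminant": (98.4, 100.0, 99.5),
}


def macro_average(rows):
    """Unweighted mean of each column across classes."""
    rows = list(rows)
    return tuple(sum(column) / len(rows) for column in zip(*rows))


precision, recall, mean_ap = macro_average(TABLE_2.values())
print(f"precision={precision:.1f}%  recall={recall:.1f}%  mAP={mean_ap:.3f}%")
```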

Key Observations:

- The final model achieved 98.4% mAP, compared with 67.4% mAP after initial training on the original dataset alone.

- Detection time per image: <0.5 seconds, suitable for real-time industrial use.

5. Conclusion

Our hybrid framework addresses the critical challenge of small datasets in engine cylinder block defect detection. By integrating pix2pix for data augmentation and YOLOv5 for high-speed detection, we achieved:

- 98.4% overall mAP, up from 67.4% when training on the original dataset alone.

- 0.57% false detection rate in production-line validation.

- Potential scalability to other industrial inspection tasks with limited training data.

Future Work:

- Extending the framework to 3D surface defect detection.

- Optimizing hardware for edge deployment in manufacturing environments.