Abstract

With the tightening of national emission standards and the demand for lighter components, vermicular graphite cast iron has gained widespread use in engine cylinder blocks and cylinder heads owing to its exceptional properties. A crucial indicator when moving vermicular graphite cast iron components from prototypes to large-scale mass production is the consistency of casting dimensions. Because vermicular graphite cast iron is inherently harder to machine than gray iron, customers impose stricter requirements on dimensional accuracy and stability, which makes casting process control more complex. Drawing on the characteristics of high-volume production-line manufacture of vermicular graphite cast iron cylinder blocks and cylinder heads, this paper examines the factors that influence dimensional accuracy and proposes measures to improve casting size precision. The study covers casting shrinkage design, core size control, core box and tooling maintenance, melting process optimization, and other aspects, all aimed at achieving stable dimensional accuracy that complies with the DIN 1686-1 GTB 15 tolerance requirements.

1. Introduction

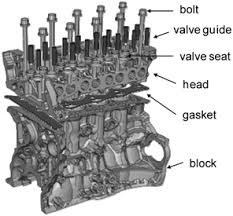

Vermicular graphite cast iron, also known as compacted graphite iron, owes its properties to a microstructure of short, thick, worm-like graphite particles, intermediate in shape between the flakes of gray iron and the spheroids of ductile iron. It offers a compelling combination of mechanical properties, including high strength, good ductility, and excellent wear resistance. These attributes have made it a prime material for critical engine components such as cylinder blocks and cylinder heads. However, the transition from prototyping to mass production poses numerous challenges, particularly in ensuring consistent dimensional accuracy, which is vital for subsequent machining operations and overall product quality.

This paper delves into the various factors influencing the dimensional accuracy of vermicular graphite cast iron engine cylinder blocks and cylinder heads, exploring strategies to mitigate dimensional deviations during the casting process. By employing a comprehensive approach that encompasses casting shrinkage management, core size control, mold maintenance, and melting process optimization, this study aims to provide practical insights for achieving precise and stable casting dimensions.

2. Product and Process Overview

The engine cylinder blocks and cylinder heads produced in this study are intended for heavy-duty trucks and are manufactured on a high-pressure green sand molding line using cold box resin-bonded sand cores. Annual demand for these components exceeds 100,000 tons, of which vermicular graphite cast iron accounts for approximately 70%, in grades RuT450 and RuT500. The minimum wall thickness of the castings is 4.5 mm, and the dimensional tolerances follow DIN 1686-1 GTB 15, as listed in Table 1.

Given the complexity of the casting process and the stringent dimensional requirements, initial production runs showed significant dimensional variation, leading to customer complaints and costly tooling rework. To address these issues, a series of improvements was implemented across process design, core size control, and melting operations, which ultimately brought the castings within customer specifications.

| Dimension Range (mm) | Tolerance (mm) |
|---|---|
| 0 – 18 | ±0.85 |
| 18 – 30 | ±0.95 |
| 30 – 50 | ±1.00 |
| 50 – 80 | ±1.10 |
| 80 – 120 | ±1.20 |
| 120 – 180 | ±1.30 |
| 180 – 250 | ±1.40 |
| 250 – 315 | ±1.50 |
| 315 – 400 | ±1.60 |
| 400 – 500 | ±1.70 |
| 500 – 630 | ±1.80 |
| 630 – 800 | ±1.90 |
| > 800 | ±2.00 |

Table 1: Dimensional Tolerances According to DIN 1686-1 GTB 15
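As an illustration of how Table 1 might be applied during dimensional inspection, the Python sketch below looks up the tolerance for a nominal dimension and checks a measured value against it. It assumes that each range runs from just above its lower bound up to and including its upper bound; the function names are hypothetical and are not taken from any inspection software used in this study.

```python
from bisect import bisect_left

# Upper bounds (mm) of the nominal-dimension ranges in Table 1 and the matching
# symmetric tolerances (mm). Assumption: each range runs from just above its
# lower bound up to and including its upper bound.
UPPER_BOUNDS_MM = [18, 30, 50, 80, 120, 180, 250, 315, 400, 500, 630, 800]
TOLERANCES_MM = [0.85, 0.95, 1.00, 1.10, 1.20, 1.30, 1.40, 1.50, 1.60, 1.70, 1.80, 1.90]
TOLERANCE_ABOVE_800_MM = 2.00


def gtb15_tolerance(nominal_mm: float) -> float:
    """Return the +/- tolerance (mm) from Table 1 for a nominal dimension."""
    if nominal_mm <= 0:
        raise ValueError("nominal dimension must be positive")
    # bisect_left places a value equal to a bound into that bound's range.
    i = bisect_left(UPPER_BOUNDS_MM, nominal_mm)
    return TOLERANCES_MM[i] if i < len(TOLERANCES_MM) else TOLERANCE_ABOVE_800_MM


def within_tolerance(nominal_mm: float, measured_mm: float) -> bool:
    """True if the measured dimension lies within nominal +/- tolerance."""
    # The tiny epsilon absorbs floating-point error exactly at the limit.
    return abs(measured_mm - nominal_mm) <= gtb15_tolerance(nominal_mm) + 1e-9


print(gtb15_tolerance(409))          # 1.7
print(within_tolerance(409, 410.8))  # False
```

Here the 409 mm dimension falls in the 400 – 500 mm range, so a 1.8 mm deviation exceeds the ±1.70 mm allowance.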

3. Casting Shrinkage Rate

The casting shrinkage rate, also known as the linear shrinkage rate, is the percentage reduction in a linear dimension of the casting as it cools to room temperature. It depends on several factors, including the inherent shrinkage behavior of the alloy, the casting geometry, the mold type, and the gating system design. Because no vermicular graphite cast iron-specific values were initially available, gray iron cylinder block and cylinder head data were used as a reference. Representative dimensions were then measured at several positions on trial castings to identify the shrinkage patterns, giving the initial shrinkage rates in Tables 2 and 3. Deviations from the predicted values required iterative adjustments and process corrections, which ultimately led to a standardized shrinkage rate specification for vermicular graphite cast iron cylinder blocks and cylinder heads (Table 4).
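One common way to write this rate, using the design value $L_d$ and the measured average $L_m$ at room temperature (the two dimension columns of Tables 2 and 3), is given below; it reproduces the values in Table 3, as the worked cylinder block length shows.

$$
\varepsilon = \frac{L_d - L_m}{L_d} \times 100\%, \qquad \text{e.g.}\quad \frac{535 - 529.2}{535} \times 100\% \approx 1.08\%
$$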

| Position | Design Value (mm) | Measured Average (mm) | Shrinkage Rate (%) |
|---|---|---|---|
| Length 1 | 576 | 569.2 | 1.12 |
| Width 2 | 483.1 | 405.1 | 0.95 |
| Height 3 | 410 | 406.2 | 0.93 |

Table 2: Measured Shrinkage Rates for a Cylinder Head Trial Casting

| Position | Design Value (mm) | Measured Average (mm) | Shrinkage Rate (%) |
|---|---|---|---|
| Length 1 | 535 | 529.2 | 1.08 |
| Width 2 | 206 | 203.8 | 1.07 |
| Height 3 | 409 | 405.1 | 0.95 |

Table 3: Measured Shrinkage Rates for a Cylinder Block Trial Casting
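The Table 3 rates can be recomputed from the design and measured values as (design − measured)/design × 100%. A short Python check is sketched below; the function name is illustrative only.

```python
def shrinkage_rate_pct(design_mm: float, measured_mm: float) -> float:
    """Linear shrinkage rate (%) expressed relative to the design value."""
    return (design_mm - measured_mm) / design_mm * 100.0


# (design value, measured average) in mm, taken from Table 3.
block_trial = {
    "Length 1": (535.0, 529.2),
    "Width 2": (206.0, 203.8),
    "Height 3": (409.0, 405.1),
}

for position, (design, measured) in block_trial.items():
    print(f"{position}: {shrinkage_rate_pct(design, measured):.2f} %")
# Length 1: 1.08 %
# Width 2: 1.07 %
# Height 3: 0.95 %
```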

| Product | Length Direction Shrinkage Rate (%) | Other Directions Shrinkage Rate (%) | Process Correction |
|---|---|---|---|
| Cylinder Block | 1.10 | 1.05 | Water jackets, cylinder liners, and crankshaft bulkheads designed separately |
| Cylinder Head | 1.20 | 1.15 | Valve seat inserts and fuel injector holes designed separately |

Table 4: Standardized Casting Shrinkage Rates for Vermicular Graphite Cast Iron Engine Components
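Applying Table 4 to tooling design can be sketched as follows. The sketch assumes the rate is defined relative to the design (tooling) value, as the Table 3 figures are, so the tooling dimension is the casting dimension divided by (1 − rate). The dictionary keys and function name are hypothetical, and the features listed in the last column of Table 4 are outside this simple rule.

```python
# Standardized rates from Table 4 (%): (length direction, other directions).
STANDARD_RATES_PCT = {
    "cylinder_block": (1.10, 1.05),
    "cylinder_head": (1.20, 1.15),
}


def tooling_dimension(product: str, casting_mm: float, along_length: bool) -> float:
    """Scale a casting drawing dimension up to a tooling dimension.

    Assumes rate = (tooling - casting) / tooling, hence
    tooling = casting / (1 - rate). Features that Table 4 lists for separate
    treatment are not covered by this simple rule.
    """
    length_rate, other_rate = STANDARD_RATES_PCT[product]
    rate = (length_rate if along_length else other_rate) / 100.0
    return casting_mm / (1.0 - rate)


print(round(tooling_dimension("cylinder_block", 529.2, along_length=True), 2))  # 535.09
```

Many foundries instead use the approximation casting × (1 + rate); at rates near 1% the two differ by about rate² of the dimension, roughly 0.06 mm for the 529.2 mm example.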

4. Core Size Control

The dimensional accuracy of complex cylinder block and cylinder head castings depends heavily on the precision of their sand cores. Each cylinder block requires 12 cores and each cylinder head 14, all produced by the cold box process and assembled by robots, with an automation rate exceeding 80%. Although cold box coring improves dimensional consistency, this high level of automation places stricter demands on core size stability. The following subsections describe the measures used to control core size.

4.1 Core Process Design

4.1.1 Coating Thickness

Cold box sand cores are usually coated, and the coating layer can significantly change core dimensions. Coating thickness varies with coating type and concentration, so it must be accounted for in the design to limit its effect on casting dimensions. Typical coating thicknesses, measured after dipping and drying, range from 0.2 mm to 0.5 mm and are set according to the product structure and coating material.
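To show how coating thickness can feed into core box design, the sketch below computes the uncoated core (core box cavity) dimension needed to reach a target coated dimension. It assumes a uniform coating on each coated face spanned by the dimension, applies only to external core dimensions (for an internal recess the sign would flip), and ignores shrinkage scaling; the function name and example values are hypothetical.

```python
def core_box_dimension(target_core_mm: float, coating_mm: float, coated_faces: int = 2) -> float:
    """Uncoated (core box) dimension that gives the target coated dimension.

    Hypothetical rule: the coating adds `coating_mm` on every coated face that
    the dimension spans, so the bare core must be smaller by that amount.
    Valid for external core dimensions only; shrinkage is handled elsewhere.
    """
    if coating_mm < 0 or coated_faces < 0:
        raise ValueError("coating thickness and face count must be non-negative")
    return target_core_mm - coated_faces * coating_mm


# Example: an 8.0 mm water jacket passage with 0.3 mm coating on both faces.
print(round(core_box_dimension(8.0, 0.3), 2))  # 7.4
```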

4.1.2 Initial Core Strength

Automated core extraction and assembly require cores with enough initial strength to withstand handling forces without deforming. Core strength depends on the sand, the binder type, and the binder addition, and the required level varies with core type and application. Table 5 lists the minimum initial strength requirements for the core types used in cylinder block and cylinder head production.

| Core Type | Minimum Initial Strength (MPa) |
|---|---|
| Main body cores | ≥ 0.7 |
| Frame cores | ≥ 1.2 |
| Water jacket cores | ≥ 1.0 |
| Intake/exhaust cores | ≥ 1.2 |

Table 5: Minimum Initial Strength Requirements for Different Core Types
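A minimal sketch of an incoming check against Table 5, assuming initial strength is measured per core type in MPa; the dictionary keys and the function name are hypothetical, and the example measurements are invented.

```python
from __future__ import annotations

# Minimum initial strength (MPa) per core type, from Table 5.
MIN_INITIAL_STRENGTH_MPA = {
    "main body": 0.7,
    "frame": 1.2,
    "water jacket": 1.0,
    "intake/exhaust": 1.2,
}


def failing_cores(measured_mpa: dict[str, float]) -> list[str]:
    """Return the core types whose measured initial strength is below Table 5."""
    return [core for core, strength in measured_mpa.items()
            if strength < MIN_INITIAL_STRENGTH_MPA[core]]


print(failing_cores({"main body": 0.8, "water jacket": 0.9}))  # ['water jacket']
```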

4.1.3 Mating Dimensions

Because each mold contains many cores of differing geometry, precise mating dimensions and positioning datums are essential for accurate core assembly. A standard mating clearance of 0.15 mm is applied, while larger contours are given clearances of 0.3 mm to 1 mm.
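To illustrate why tight mating clearances matter when many cores are stacked, the sketch below applies a simple worst-case stack-up, an illustrative choice rather than a method described in this study. It assumes that a joint with clearance c lets a core sit anywhere within ±c/2 of nominal and that the offsets of successive joints in a chain can all add in the same direction; the function name is hypothetical.

```python
from __future__ import annotations


def worst_case_offset(clearances_mm: list[float]) -> float:
    """Worst-case +/- positional offset (mm) at the end of a chain of joints.

    Assumes each joint with clearance c lets the mating core sit anywhere
    within +/- c/2 of nominal, and that all offsets can add in one direction.
    """
    return sum(c / 2 for c in clearances_mm)


# Four joints at the standard 0.15 mm clearance.
print(round(worst_case_offset([0.15] * 4), 3))  # 0.3
```

Under these assumptions, four joints at 0.15 mm already allow about ±0.30 mm, a sizable share of the ±0.85 mm permitted for small dimensions in Table 1.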

4.1.4 Parting Allowance

A negative allowance is designed into the core box at its parting surfaces to keep core dimensions within specification. This allowance, typically (0.6 ± 0.1) mm, compensates for core box deformation, sand shooting pressure, and the use of sealing strips during core making.
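The arithmetic of the negative parting allowance can be sketched as below, assuming the allowance is subtracted from core dimensions that cross the parting plane, where shooting pressure or sealing strips can hold the box halves slightly apart. The function name and the 120 mm example are hypothetical.

```python
from __future__ import annotations

PARTING_ALLOWANCE_MM = 0.6      # nominal negative allowance quoted in the text
PARTING_ALLOWANCE_TOL_MM = 0.1  # +/- band quoted in the text


def core_box_across_parting(nominal_mm: float) -> tuple[float, float, float]:
    """(min, nominal, max) core box dimension across the parting plane."""
    box = nominal_mm - PARTING_ALLOWANCE_MM
    # An allowance of 0.5-0.7 mm puts the box dimension in [nominal-0.7, nominal-0.5].
    return box - PARTING_ALLOWANCE_TOL_MM, box, box + PARTING_ALLOWANCE_TOL_MM


low, nom, high = core_box_across_parting(120.0)
print(f"{low:.1f} / {nom:.1f} / {high:.1f}")  # 119.3 / 119.4 / 119.5
```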

4.2 Core Box Quality Control

4.2.1 Material Selection

The dimensional accuracy of a core box depends directly on its material. To resist wear and deformation, 4Cr5MoSiV1 hot-work tool steel (comparable to AISI H13) is used for core box bodies, and QT500 ductile iron is preferred for the sand shooting and blowing plates.

4.2.2 Machining Requirements

Precision machining equipment is essential for holding tight tolerances: electrical discharge machining (EDM) is used for sharp corners, and mating surfaces are finished by scraping to minimize deviations. Surface hardening treatments such as carburizing or nitriding may also be applied to further improve dimensional stability.

4.2.3 Maintenance

Regular maintenance schedules limit wear on core boxes: on-line cleaning is performed every 200 to 300 cores and off-line cleaning every 500 to 600 cores. Positioning pins and bushings are replaced when their wear reaches 0.2 mm. Similar maintenance protocols apply to pattern plates, sand flasks, and fixtures.
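A sketch of how these intervals might drive a maintenance trigger. It assumes, hypothetically, that the lower bound of each cleaning range is used as the trigger, that an off-line cleaning also covers the on-line cleaning, and that pin wear is measured in millimetres; `CoreBoxState` and `due_actions` are illustrative names.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical triggers: the lower bound of each cleaning range in the text.
ONLINE_CLEAN_INTERVAL = 200   # text: every 200-300 cores
OFFLINE_CLEAN_INTERVAL = 500  # text: every 500-600 cores
PIN_WEAR_LIMIT_MM = 0.2


@dataclass
class CoreBoxState:
    cores_since_online_clean: int
    cores_since_offline_clean: int
    pin_wear_mm: float


def due_actions(state: CoreBoxState) -> list[str]:
    """List the maintenance actions due for one core box."""
    actions = []
    # Assumption: an off-line cleaning also covers the on-line cleaning.
    if state.cores_since_offline_clean >= OFFLINE_CLEAN_INTERVAL:
        actions.append("off-line cleaning")
    elif state.cores_since_online_clean >= ONLINE_CLEAN_INTERVAL:
        actions.append("on-line cleaning")
    if state.pin_wear_mm >= PIN_WEAR_LIMIT_MM:
        actions.append("replace positioning pins and bushings")
    return actions


print(due_actions(CoreBoxState(210, 210, 0.05)))  # ['on-line cleaning']
```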

5. Mold and Sand Control

The mold is the foundation of casting dimensional accuracy, and its contribution depends not only on the pattern dimensions but also on mold strength and sand properties. The high-pressure molding process produces a mold plane hardness of ≥ 16 and a riser hardness of ≥ 11 (PFP mold hardness scale), giving the mold enough rigidity to resist casting shrinkage and expansion. High-quality composite sand is also used, with key properties held within specified ranges (e.g., permeability: 130 – 170; green strength: 0.12 – 0.15 MPa; compactability: 30% – 34%).
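The acceptance windows quoted above can be encoded as a simple limit check, sketched below in Python. The property keys and the function name are hypothetical, and the sample values are invented for illustration.

```python
# Acceptance windows quoted in the text; None means no upper limit.
SPEC = {
    "mold_plane_hardness": (16, None),
    "riser_hardness": (11, None),
    "permeability": (130, 170),
    "green_strength_mpa": (0.12, 0.15),
    "compactability_pct": (30, 34),
}


def out_of_spec(sample: dict) -> dict:
    """Return the properties of a sand/mold sample that fall outside SPEC."""
    bad = {}
    for prop, (low, high) in SPEC.items():
        value = sample[prop]
        if value < low or (high is not None and value > high):
            bad[prop] = value
    return bad


sample = {  # invented example values
    "mold_plane_hardness": 17,
    "riser_hardness": 12,
    "permeability": 176,
    "green_strength_mpa": 0.13,
    "compactability_pct": 32,
}
print(out_of_spec(sample))  # {'permeability': 176}
```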

6. Melting Process Control

Vermicular graphite cast iron production requires tight process control because of its narrow processing window. Variations in the composition of the vermicularizing agent significantly affect melt shrinkage, so melt chemistry must be closely monitored and adjusted. Based on empirical data, a stable melting process was established in which magnesium and other critical elements are tightly controlled to minimize dimensional deviations.

7. Casting Deformation Control

Casting deformation, which is particularly common in cylinder heads, stems from residual stresses generated during solidification and cooling. These stresses produce compressive and tensile strains in different regions of the casting, distorting its dimensions. Longer in-mold cooling times are used to reduce temperature gradients and hence deformation. For critical dimensions, a reverse deformation allowance is built into the tooling to compensate for the anticipated distortion.
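The reverse deformation idea can be sketched as follows, assuming the distortion is repeatable from casting to casting and that shrinkage has already been handled separately. The function name and the optional scaling `factor` are hypothetical; in practice the measured profile would come from trial castings, and the values in the example are invented.

```python
from __future__ import annotations


def reverse_deformation(nominal_mm: list[float], measured_mm: list[float],
                        factor: float = 1.0) -> list[float]:
    """Offset tooling coordinates opposite to the measured casting distortion.

    nominal_mm and measured_mm hold the same coordinate at matching points.
    factor = 1.0 applies the full measured distortion in reverse; a smaller
    factor is a hypothetical, more cautious choice.
    """
    if len(nominal_mm) != len(measured_mm):
        raise ValueError("point lists must have the same length")
    return [n - factor * (m - n) for n, m in zip(nominal_mm, measured_mm)]


# Invented example: a face that bows 0.4 mm at mid-length in trial castings.
print(reverse_deformation([0.0, 0.0, 0.0], [0.0, 0.4, 0.0]))  # [0.0, -0.4, 0.0]
```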

8. Conclusion

The dimensional accuracy of vermicular graphite cast iron engine cylinder blocks and cylinder heads is closely tied to many process parameters. By applying targeted measures in casting shrinkage management, core size control, mold and sand control, melting process refinement, and deformation mitigation, this study demonstrates that stable dimensional accuracy compliant with DIN 1686-1 GTB 15 can be achieved. Key takeaways include:

- Selecting appropriate shrinkage rates based on measured casting dimensions.

- Designing reasonable initial core strengths and parting allowances.

- Establishing rigorous core box material and machining standards.

- Managing iron melt chemistries to minimize dimensional deviations.

- Prolonging in-mold cooling times to reduce casting deformations.